Real Time PowerBI Analytics

Posted in :

Every user wants data faster, while the volume and velocity of data only increases. How can one make sure this data is ingested, transformed, and displayed so users can quickly act based on the newest info. In a PowerBI environment that can be a bit cumbersome.

In 2019, had a client that was going to send us transactional data that we wanted to automate ingestion and ETL of which then was being used in a PowerBI report. By default, PowerBI Premium allows you to schedule dataset refreshes only on the top and 30 after the hour. Meaning you get 48 refreshes per day (Standard allows for 8 refreshes per day), but not on a schedule you control, so to achieve this was a bigger challenge than I expected.

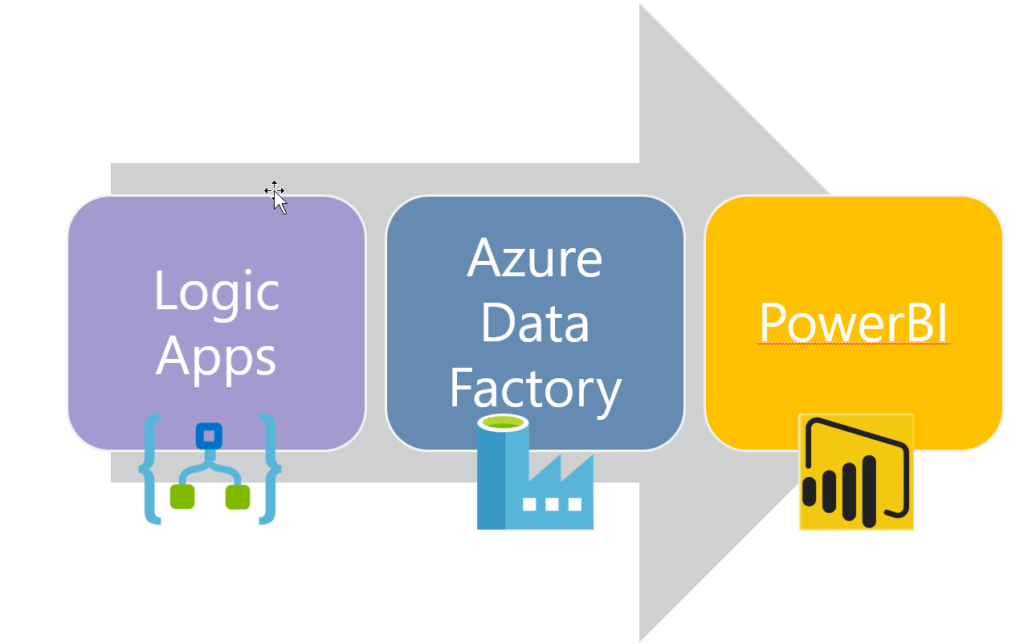

My first (and so far only) SQL Saturday presentation was on how I used Azure Logic Apps, Azure Data Factory, and the PowerBI API to solve this and wanted to share. (There has been some changes in last 12 months, so will try to call those out. )

Azure Logic Apps

Logic Apps is the low/no code event driven app service for Azure (Microsoft’s cloud platform). It is the enterprise big brother to what was Microsoft Flow, now Power Automate. It is built to allow you to define a workflow where if this happens then do that.

- Every Logic App must have at least one trigger

- Trigger can be a schedule

- Logic Apps only “Fires” When Trigger has positive result, i.e. when new file exists.

- Only cost is when a trigger fires

- Over 200 connections, Multiple activities

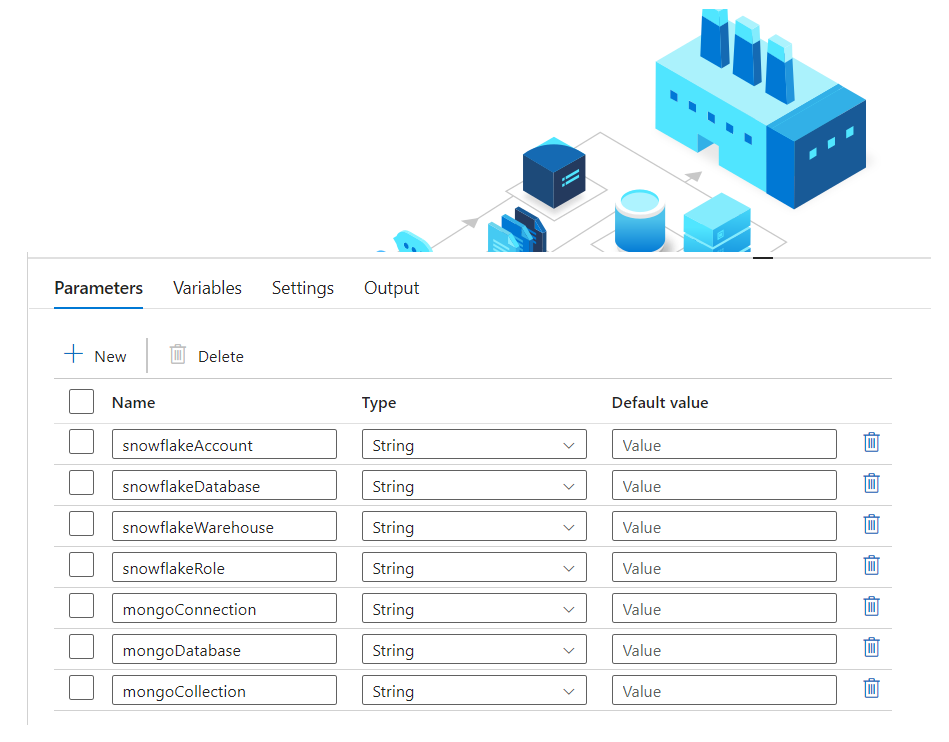

Azure Data Factory

Azure Data Factory (v2) is a data orchestration engine to allow for easy data movement for Extraction Transformation Load (ETL) / Extraction Load Transformation (ELT) work loads.

- Can be drag and drop, code free

- Can interact with both cloud and on prem data sources

- Variety of data connectors, SQL, Oracle, Big Data, NoSQL, etc.

- PaaS – Only pay for run time

- Many transformations available in “data flows”

- ADF has native triggers, but are optional

PowerBI API

PowerBI is the Microsoft reporting/BI development tool. PowerBI REST API allows you to interact with the PowerBI service programmatically.

Building Work Flow

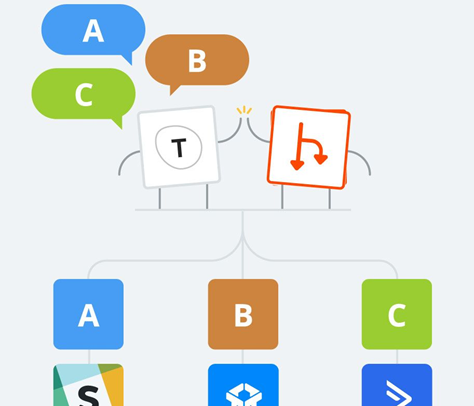

Logic Apps constitute the glue between all the parts. There is a bit of a backwards process in how you develop, versus how you will use the tools. The workflow runs like this

But to develop, you have to have the ADF Pipeline built out, for Logic Apps to call it, and you have to have the PowerBI dataset created and the API connection created before you create the Logic App to call it.

Development Steps

- Develop ETL/ELT data pipeline

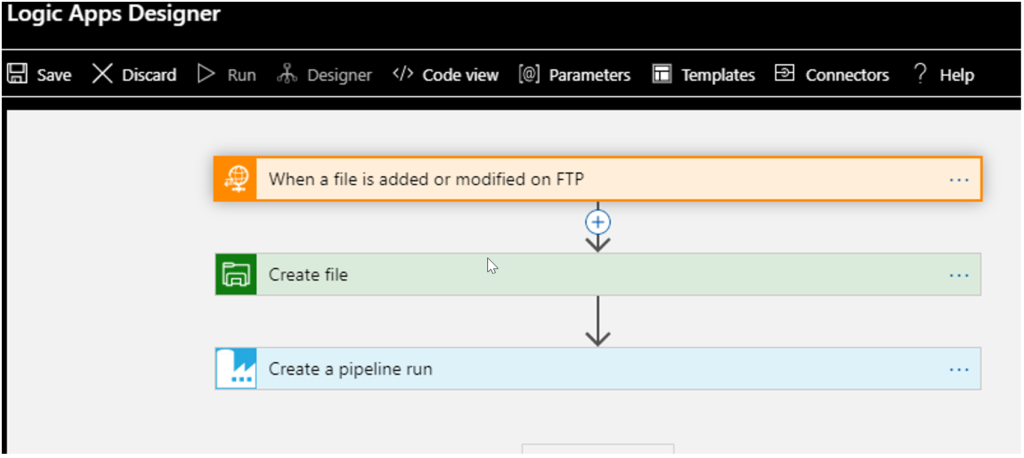

- Create Logic App to be triggered by new file that will call ADF pipeline

- Create PowerBI dataset that will be refreshed

- Create PowerBI Custom API Connector (now this step may be skipped, see below)

- Create Logic App to be called by ADF and in turn call PowerBI dataset refresh

In my case, the file was coming in via FTP, but could have been coming via email, service bus messaging queue, etc.. The Logic App has a check for new files on FTP trigger. However, I found it a bit tricky to work with because it only looked for “new” files and I had no control over what it thought was new. I have found it easier to have the trigger on a timer, and then only proceed if a file exists, yet that mean I am paying for more triggers, even if not activities (remember you are paying per use). Once there was a file that met the condition, it would call my ADF pipeline.

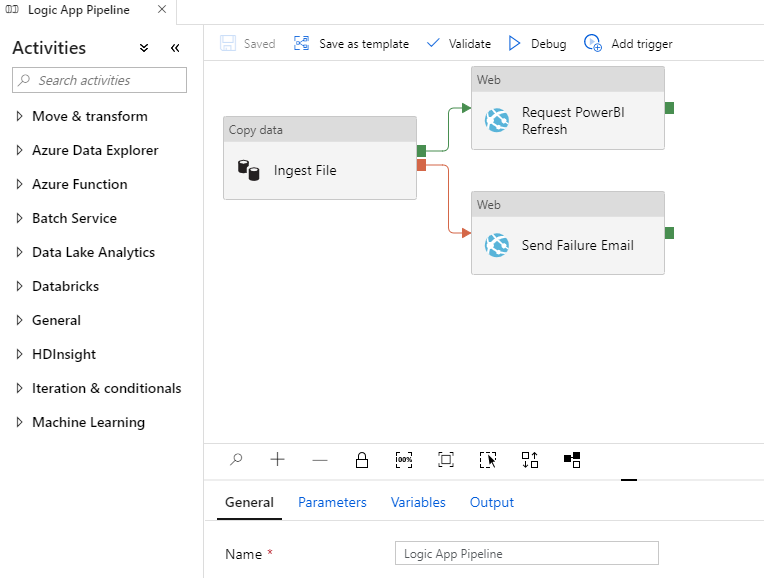

The ADF pipeline took the file from FTP, copied it to a blob (originally I had the copy to blob in Logic Apps, you can do either), ingested the file to a staging table in SQL, transformed the file and then wrote to a final reporting table. There was minimal work being done, but your pipeline can be as complex as you want.

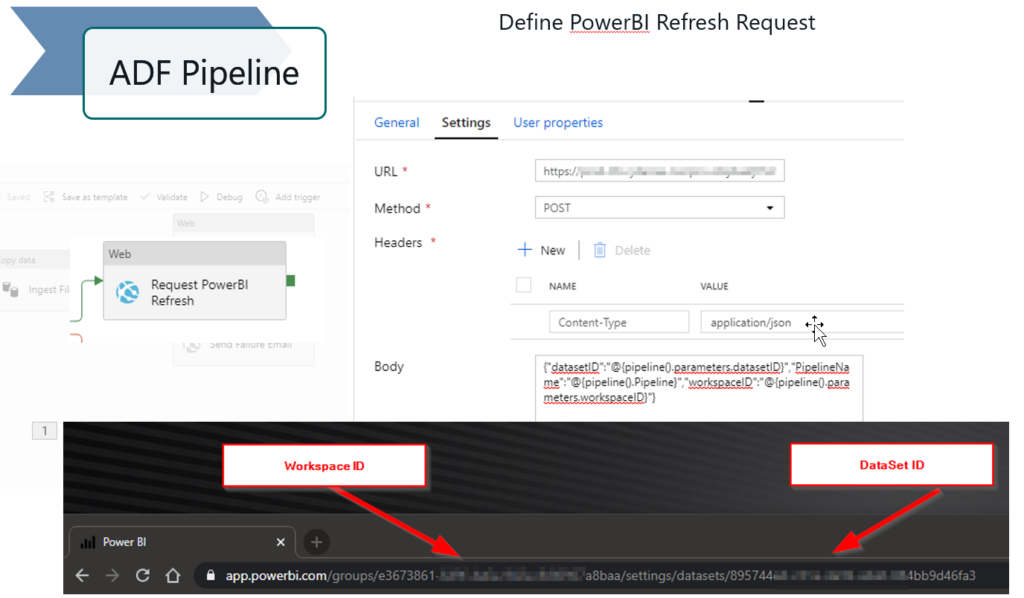

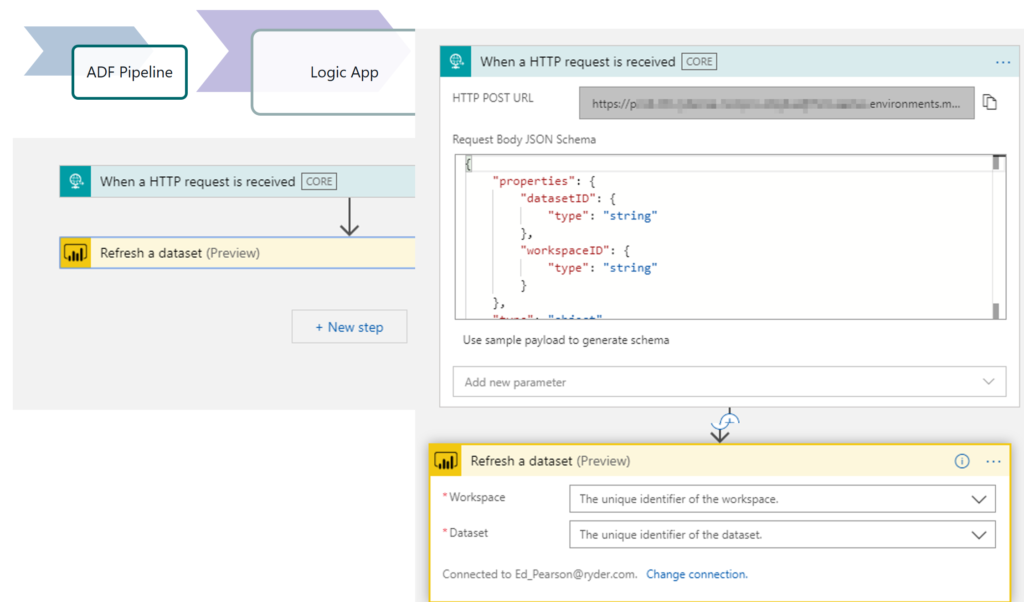

The second Logic App has an HTTP trigger. When creating and saving the Logic App it will create an endpoint URL for you to call from whatever upstream service you using, in this case ADF. You must have some kind of JSON body you supply to the Logic App (I find this website super helpful to convert your JSON to a valid schema). You can make it generic, but for mine I have it take in PowerBI Workspace GUID and Dataset GUID, that way I could reuse it for multiple pipelines across the team.

Originally, when created in 2019, to call any activity in the PowerBI API, you had to create a custom connector. Koen Verbeeck had created a very good series explaining how to use Logic Apps in ADF and how to create a PowerBI API connector. This was actually the part that took the longest for me, so if you need to use API, highly recommend his posts. (I also use his series to create notification Logic App in my ADF pipelines as you can see above)

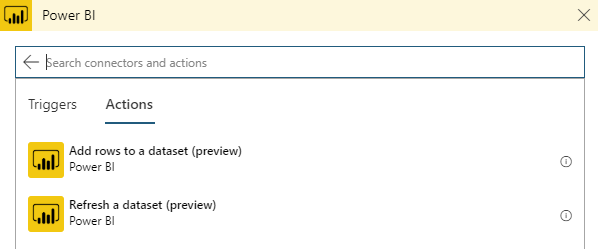

However, as with everything Azure, things change quickly and before I gave presentation in March, 2020, the folks at Logic Apps had added two PowerBI connector activities, and they are the only ones you need to do this workflow, specifically refresh a dataset. The connector still needs you to authenticate and you will need to have access to the workspace and dataset you want to refresh.

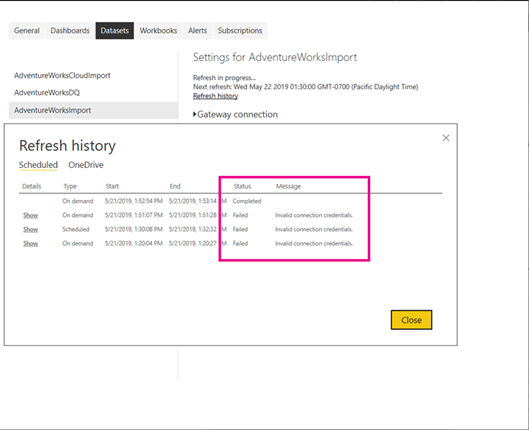

Once you think you have triggered your import model dataset refresh, you can go out to the workspace and see if it is running. Keep in mind, if you are on PowerBI Premium Capacity you can call the API for refresh an unlimited times per day, however, that is not true on standard workspace, so check with PowerBI admin.

On the PowerBI refresh, you’ll see that when you trigger a refresh it shows up as manual, just like if you clicked the button in the UI. It also will not allow you to request another refresh if one currently running, when you do, your Logic App will register as a failure. Lastly, it run asyncronously, and it has no idea once submitted successfully if refresh completes. To check status of refresh you will have to make another API call, one that you will need the custom connector for.

You can change this workflow as needed, but hopefully this gives you an idea to closely couple your ETL/ELT needs with PowerBI updates so users can see new data soon after you get it.

Would love to hear comments or other ways you have used these technologies, comment below or hit me up on Twitter or LinkedIn.